contents

Blogs / 5 Things you Should Consider When Building an Enterprise Chat Assistant

5 Things you Should Consider When Building an Enterprise Chat Assistant

May 15, 2024 • Matthew Duong • Self Hosting; • 3 min read

Imagine you're an executive at a fast-growing startup, scrambling to prepare for an imminent board meeting. As usual, you forgot to prepare, and the calendar alert just reminded you 30 minutes before the meeting starts. In a panic, you quickly message your assistant, “Hey Bob, what’s our liquidity ratio for the last year?” Within seconds, you receive a detailed, accurate response that saves the day. This seamless interaction isn't a stroke of luck—it's the result of meticulous and sophisticated planning by the engineering team.

At Truewind, we are developing a sophisticated chat assistant for an enterprise client. This assistant is designed to handle complex tool requests through simple prompts, delivering executive-level responses like "What is my liquidity ratio for the last year?". Here are five challenges and considerations we've tackled during the development process.

1. Streaming Responses

One of the things that is immediately noticeable when using a chat application is the response time. Due to the nature of how LLMs build up responses (in a sequence token by token basis) a typical response can take between 15-30 seconds to complete. Obviously having a 30 second delay before receiving response is unacceptable from a user experience perspective.

To enhance the perceived responsiveness, we implement streaming responses. This approach ensures that users receive immediate feedback, even if the entire response is not yet complete. By streaming responses at roughly the same speed that users read, we provide value almost instantaneously, significantly improving the user experience.

2. Handling Structured Data

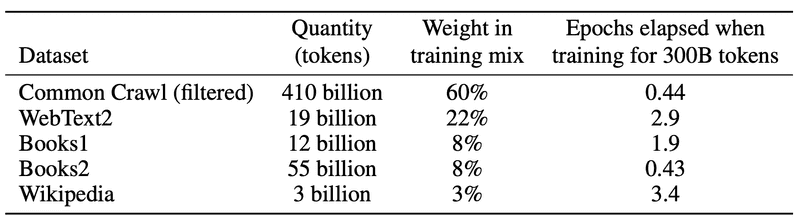

A lesser-known detail about LLMs is that their performance—both in terms of latency and accuracy—is significantly influenced by the structure of the data they receive. This is because their performance is based on the volume of data that it trained on specifically in the corresponding format. Consequently, natural text is often the most efficient format for LLMs, though we've also found that JSON works reasonably well since the models we use have been trained on code.

When handling interactions between the model and tool calls, we manage exceptions internally and avoid returning full tracebacks. This ensures that users receive clear and concise information. Additionally, we sometimes transform outputs into more efficient formats to optimize the LLM's performance.

3. Parallelizing Tool Requests

Some user prompts necessitate multiple tool calls to gather all the required information. For example, a customer might request a comprehensive report that requires data to be retrieved from multiple sources. Sequentially processing each data call can negatively impact the customer experience.

Instead of sequentially processing each request, where we can we fire them off in parallel. This approach significantly reduces the overall response time, providing our customers with a more responsive experience.

4. Utilizing Nested Models

One of the challenges we faced is the efficient allocation of resources. Using powerful models for every task can quickly exhaust our budget and token limits, especially for simple tasks that don't require such advanced capabilities.

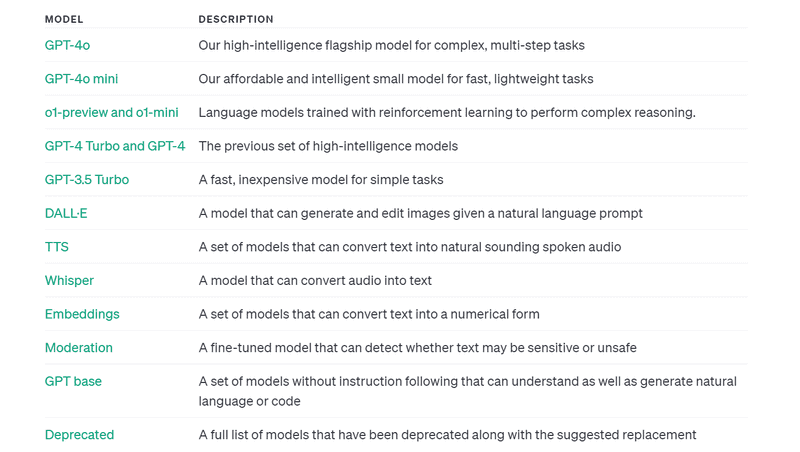

To address this, we dynamically switch between models based on the complexity of the task. A robust, larger model oversees the overall process, ensuring accuracy and coherence. For simpler, routine tasks that require quick confirmations, we employ faster, less resource-intensive models (e.g., GPT-3.5). This approach optimizes both speed and cost-efficiency, ensuring that users receive timely responses without compromising on the quality of more complex interactions.

5. Interpreting Arguments

Incorrect arguments to tool calls are a common and frustrating issue. While most of these errors stem from user input, the LLM can occasionally make mistakes as well. These incorrect arguments can significantly degrade the user experience. For instance, if a customer requests the cash balance for account "A" but receives the message "Assistant was unable to retrieve cash balance". This experience would be extremely frustrating.

At Truewind, we have guardrails to guide the LLM when calling tools. All arguments are thoroughly sanitized within the to prevent errors. For instance, if the system expects "111" (account class for cash equivalents) but receives "cash equivalents," our assistant will detect the discrepancy, provide a clear error message, and guide the user toward the correct format. Additionally, the assistant may confirm the interpreted request with the user to ensure accuracy before proceeding. This confirmation is especially important for requests that modify or perform destructive actions as well as provide feedback as to what parameters it has interpreted.

The Experience

Truewind's chat assistant delivers timely, accurate, and user-friendly interactions, thanks to the sophisticated engineering and meticulous planning from Renato and Caio. There's a lot happening under the hood, allowing executives to receive high-quality, executive-level responses in critical moments, ensuring a seamless and efficient experience for our enterprise clients.