My Experience Running HeadJobs: Generative AI at Home

October 03, 2023 • Matthew Duong • Kubernetes; Self Hosting; Software Engineering • 2 min read

The Problem: The High Cost of Cloud Computing and Non-Existent Inventory

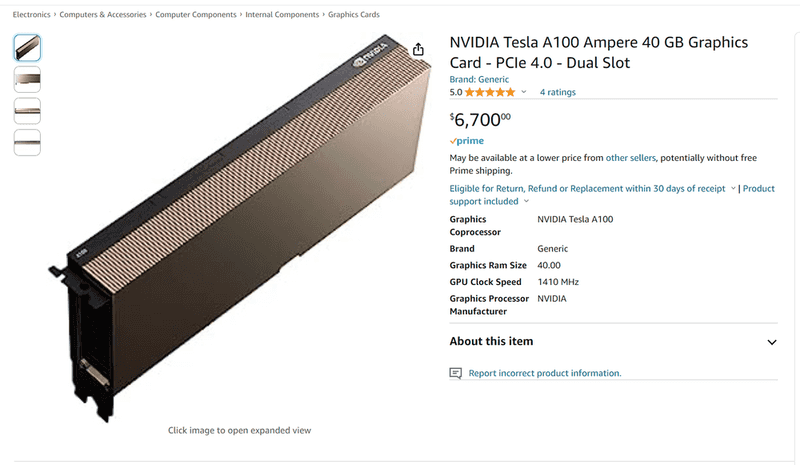

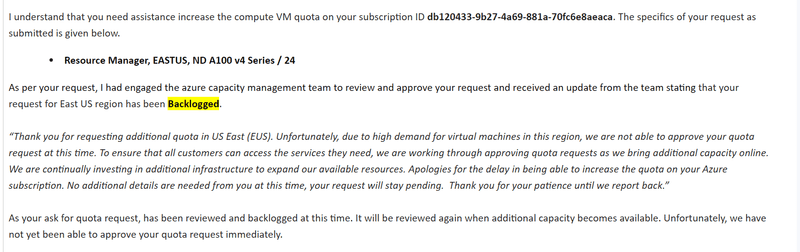

As the administrator of Headbot, a generative AI project that creates personalised AI avatars, I found myself grappling with the exorbitant costs and limitations of cloud-based GPU services. Running GPU nodes on Google, Azure, and AWS could set me back between $1 and $2 per hour. These numbers quickly add up, especially when you're on a tight budget and can't afford data centre-level GPU nodes.

Cloud providers have also been consistently out of GPU capacity since December 2022, so even getting quota was a struggle. It was clear that a different solution was needed.

The Concept of "HeadJobs": The Engine Behind Headbot

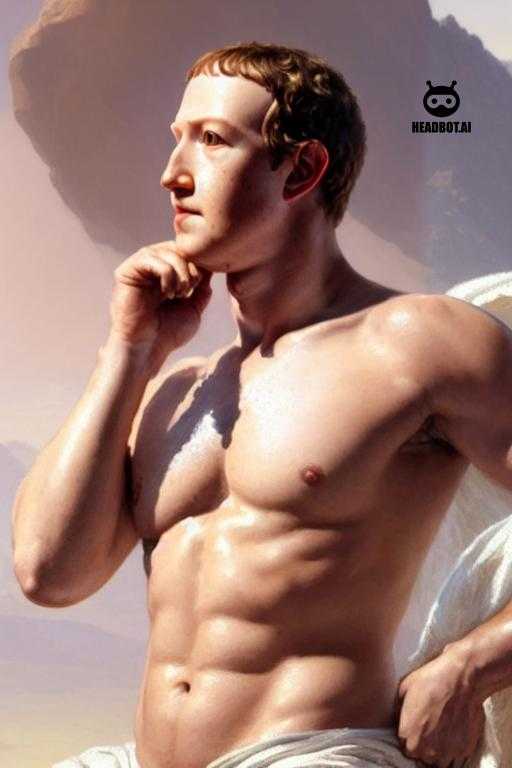

The core of Headbot lies in Headbot Jobs, or what I've named "HeadJobs" for short. These are specific computational tasks spun up to create personalised AI avatars. Once you upload 10+ portraits of yourself, a HeadJob kicks into gear. It's trained to capture your unique facial and body features, right down to your preferred clothing styles. Each HeadJob spends time learning what “you” look like: your facial expressions, your hair, and even your fashion sense.

My Infrastructure: Consumer GPUs on Home Servers

My cost-effective yet powerful solution runs on my home server, utilising Kubernetes on RKE2. The setup includes three Dell R730xd nodes to run the web app and API, along with two RTX 3090 GPUs for the heavy lifting, i.e., running the actual generative AI jobs. These 3090s are a godsend; not only are they cost-efficient, but they also offer just the right performance to handle one Headbot job per node.
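One way to get the "one job per node" behaviour on Kubernetes is to have every job request a whole GPU. The sketch below builds such a Job manifest as a plain Python dictionary. It assumes each GPU node holds a single card and that the NVIDIA device plugin is installed so nodes expose the `nvidia.com/gpu` resource. The function name, image name, and job naming scheme are made up for illustration and are not Headbot's actual configuration.

```python
import json


def build_headjob_manifest(job_id: str, image: str = "example.invalid/headjob:latest") -> dict:
    """Return a Kubernetes batch/v1 Job manifest that requests one whole GPU.

    The image and the "headjob-" naming scheme are hypothetical placeholders.
    """
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": f"headjob-{job_id}"},
        "spec": {
            # Do not retry automatically; a failed job is left for inspection.
            "backoffLimit": 0,
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "headjob",
                            "image": image,
                            # Requesting one GPU keeps a second job off a
                            # single-GPU node until this one finishes.
                            "resources": {"limits": {"nvidia.com/gpu": 1}},
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_headjob_manifest("demo"), indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be piped straight into `kubectl apply -f -`.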

The Spiky Nature of Workloads

Due to the high variability of incoming jobs, there are times I need to scramble for additional computational resources. This is where my buddy John comes in with his spare RTX 4090 node, which we utilise during peak demand.
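The overflow idea can be sketched as a tiny dispatcher: fill the home GPU nodes first, then spill onto the borrowed node, and leave everything else queued. This is only an illustration of the logic, not how Headbot actually schedules work; the function and node names are hypothetical.

```python
from collections import deque


def assign_jobs(pending, home_nodes, burst_nodes, busy=frozenset()):
    """Assign at most one job per free node, trying home nodes before burst nodes.

    pending:     job ids in arrival order
    home_nodes:  names of the always-on GPU nodes
    burst_nodes: names of borrowed nodes used only when home nodes are full
    busy:        names of nodes that are already running a job
    Returns (assignments, waiting): a node -> job mapping and the leftover jobs.
    """
    queue = deque(pending)
    assignments = {}
    for node in list(home_nodes) + list(burst_nodes):
        if not queue:
            break
        if node in busy:
            continue
        assignments[node] = queue.popleft()
    return assignments, list(queue)


if __name__ == "__main__":
    placed, waiting = assign_jobs(
        ["a", "b", "c", "d"], ["gpu-1", "gpu-2"], ["john-4090"]
    )
    print(placed)   # {'gpu-1': 'a', 'gpu-2': 'b', 'john-4090': 'c'}
    print(waiting)  # ['d']
```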

The Technicalities: Software, Drivers, and More

Maintaining this setup isn't a walk in the park, even with all the cost advantages. One of the major challenges lies in keeping the GPU drivers up to date, for which I use Lambda Stack. The customer GPU jobs are executed using PyTorch, and each job is specifically designed to capture not just facial features but body features and clothing styles as well.
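After a driver update, a quick sanity check is to ask PyTorch whether it can still see the GPU. The helper below is a hypothetical example of such a check, not part of Headbot; it only uses standard `torch.cuda` calls and degrades gracefully when PyTorch is not installed.

```python
def gpu_report() -> dict:
    """Summarise what PyTorch can see on this machine.

    Returns a dict with keys: torch_installed, cuda_available, devices.
    """
    try:
        import torch
    except ImportError:
        # PyTorch is missing, so there is nothing to query.
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    available = torch.cuda.is_available()
    devices = []
    if available:
        # Collect the marketing name of every visible CUDA device.
        devices = [
            torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())
        ]
    return {"torch_installed": True, "cuda_available": available, "devices": devices}


if __name__ == "__main__":
    print(gpu_report())
```

If `cuda_available` comes back `False` on a GPU node, the driver or CUDA stack is the first place to look before any jobs are scheduled there.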

The Fun of It: A Problem for the Sake of Problem-Solving

Let's be clear: running Headbot this way isn't solving a world-crisis-level problem. It's essentially a problem concocted for the sheer joy of solving it, turning AI and machine learning into a form of high-tech artistry where predefined shapes and poses are painted with your personalised features. This isn’t going to be the next Silicon Valley unicorn.

The Hidden Complexities: A Snapshot of the Challenges

Running this setup isn't plug-and-play. It requires intricate Kubernetes configurations, real-time monitoring for spiky workloads, and constant driver updates via Lambda Stack. Each component needs fine-tuning and ongoing attention, making it a far cry from a "set and forget" operation.

The Verdict: Do It Yourself or Try Headbot

If you're not keen on dealing with the hassles and complexities of setting up your own generative AI project, you might want to check out Headbot. But if you're up for the challenge, running your own cluster can save you substantial amounts of money. In my case, the cost comes down to just 3 cents per hour—peanuts compared to what you'd shell out on cloud platforms.
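To put the hourly figures side by side, here is a back-of-the-envelope comparison using only the rates quoted in this post ($1 to $2 per hour in the cloud, 3 cents per hour at home). The number of hours is a made-up input, and the sketch compares hourly rates only; it does not account for anything else, such as the price of the hardware.

```python
CLOUD_RATE_LOW = 1.00   # USD per hour, low end quoted for cloud GPU nodes
CLOUD_RATE_HIGH = 2.00  # USD per hour, high end quoted for cloud GPU nodes
HOME_RATE = 0.03        # USD per hour, the home cluster figure from this post


def compare_costs(hours: float) -> dict:
    """Return the cost in USD of running for `hours` at each quoted rate."""
    return {
        "home": round(hours * HOME_RATE, 2),
        "cloud_low": round(hours * CLOUD_RATE_LOW, 2),
        "cloud_high": round(hours * CLOUD_RATE_HIGH, 2),
    }


if __name__ == "__main__":
    # 100 hours is an arbitrary illustrative workload.
    print(compare_costs(100))
    # {'home': 3.0, 'cloud_low': 100.0, 'cloud_high': 200.0}
```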

So, are you up for the challenge, or would you rather take the easy route and create your personalised AI avatar on Headbot? The choice is yours.

Feel free to create your own AI avatars with Headbot, or if you're interested in the nitty-gritty, stay tuned for more detailed breakdowns of my setup.